Ball Tracking

The ideas introduced in this tutorial have not been verified to comply with FIRST rules. Please check the rule manual before modifying, or making unusual use of, any items provided in the FIRST kit of parts.

This tutorial assumes that your robot has some form of ground pickup system that will attempt to pick up a ball in preparation for shooting. Automating ball pickup so that it runs at the press of a joystick button lets the robot acquire the ball quickly and precisely. A camera mounted on the robot has a much better view of the balls immediately around it than a driver who watches the robot from a distance at a shallow, almost planar angle. Because of this advantageous viewpoint and automated control, a robot with a camera can acquire a ball much more quickly and in fewer tries than a human driver ... unless of course you're one heck of a driver!

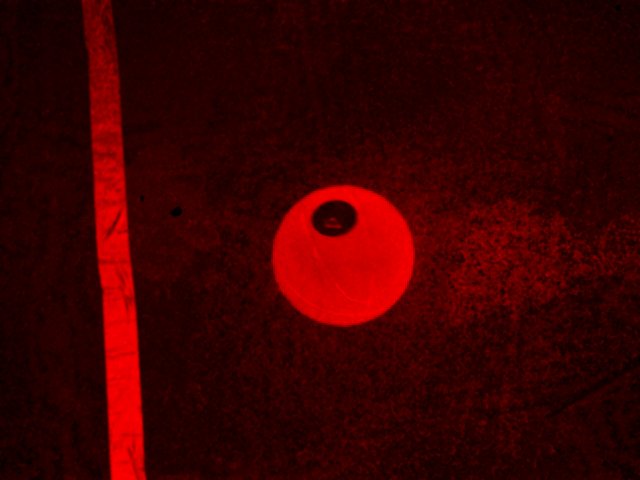

Let's begin by using two images of the Rebound Rumble ball to create a detection system that will uniquely identify the ball.

The first and most obvious trait of the ball is that it is orange. This feature is very useful for segmenting the ball from the rest of the image, given that most images are assumed to be taken from above the floor, looking down at the area in front of the robot's ball pickup mechanism. This means most of the image will show the ground (typically green or gray carpet), other robots, field lines (blue or red) and balls. Given the number of missed shots, there will probably be plenty of balls on the ground to see!

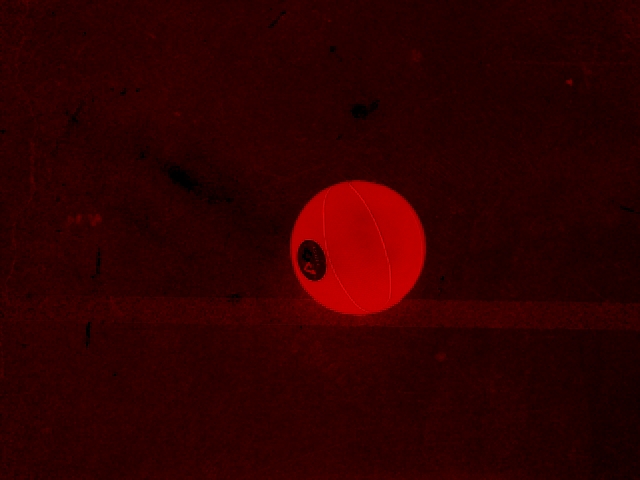

Using the RGB filter we can quickly eliminate all colors that are not red. Some parts of the image still have red components, but compared to the orange ball (which contains a lot of red) they are minor. While this removes the non-red components, it does not remove parts of the image that are reddish but not part of the ball.
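The idea behind the red filter can be sketched as below. This is only an approximation under stated assumptions: RoboRealm's exact RGB filter formula is not given here, so the sketch assumes a pixel counts as "red" when its red channel exceeds both green and blue by a margin. The function name `red_filter` and the `min_excess` parameter are hypothetical, and the image is assumed to be a list of rows of `(r, g, b)` tuples.

```python
def red_filter(image, min_excess=40):
    """Keep pixels whose red channel exceeds both green and blue by min_excess.

    Hypothetical stand-in for an RGB red filter (not RoboRealm's exact formula).
    image: list of rows, each row a list of (r, g, b) tuples in 0..255.
    Returns a grayscale mask: the red excess for kept pixels, 0 elsewhere.
    """
    mask = []
    for row in image:
        out_row = []
        for r, g, b in row:
            excess = r - max(g, b)  # how much "redder" than the other channels
            out_row.append(excess if excess >= min_excess else 0)
        mask.append(out_row)
    return mask
```

For example, an orange ball pixel `(255, 140, 0)` survives with a value of 115, while gray carpet `(120, 120, 120)` and green carpet `(40, 120, 40)` drop to 0. A red field line `(200, 30, 30)` also survives, which is exactly the limitation described above: color alone cannot separate the ball from other reddish objects.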

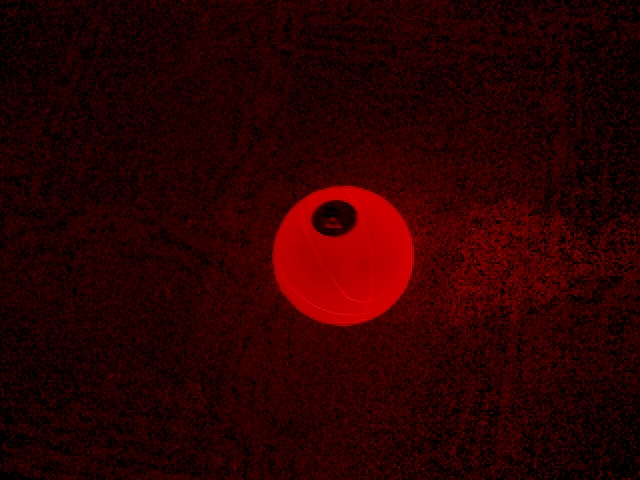

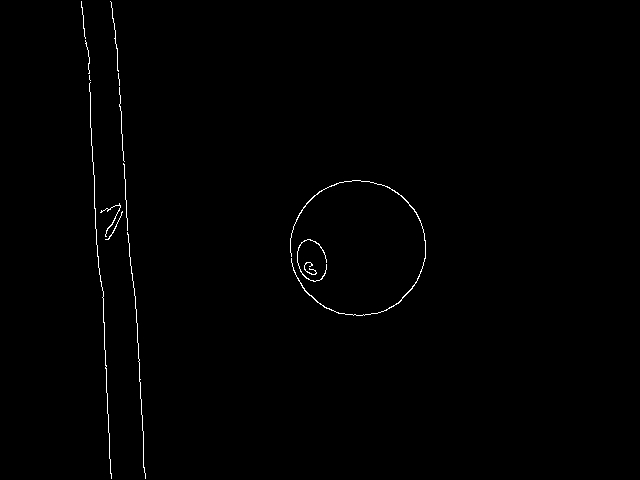

This requires us to use the second obvious property of the ball: its shape. Before we can find the shape of the ball, we need to detect the edges that define it. We do this using Canny edge detection.
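To show what an edge detector does with the filtered image, here is a simplified sketch. It is not a full Canny detector: it computes the Sobel gradient magnitude and thresholds it, and omits Canny's Gaussian smoothing, non-maximum suppression and hysteresis thresholding. The name `sobel_edges` and the `threshold` value are hypothetical choices for illustration.

```python
import math


def sobel_edges(gray, threshold=100):
    """Binary edge map (1 = edge) from thresholded Sobel gradient magnitude.

    gray: list of rows of ints (for example the red-filter output).
    Border pixels stay 0 because the 3x3 kernel does not fit there.
    Simplified stand-in for Canny: no smoothing, no non-maximum
    suppression, no hysteresis.
    """
    h, w = len(gray), len(gray[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        above, row, below = gray[y - 1], gray[y], gray[y + 1]
        for x in range(1, w - 1):
            # Horizontal gradient: weighted right column minus left column.
            gx = (above[x + 1] + 2 * row[x + 1] + below[x + 1]) - (
                above[x - 1] + 2 * row[x - 1] + below[x - 1])
            # Vertical gradient: weighted bottom row minus top row.
            gy = (below[x - 1] + 2 * below[x] + below[x + 1]) - (
                above[x - 1] + 2 * above[x] + above[x + 1])
            if math.hypot(gx, gy) >= threshold:
                edges[y][x] = 1
    return edges
```

On a sharp step from dark to bright, this marks a two-pixel-wide band along the boundary; Canny's non-maximum suppression step would thin that band to a single pixel.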

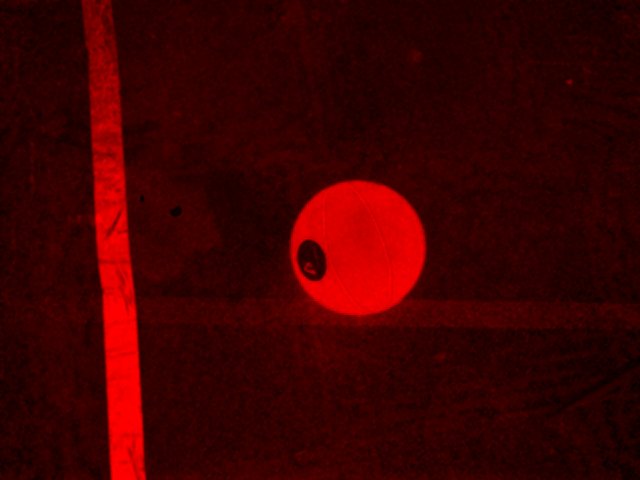

Now we can detect which parts of the image contain a circle. Since the ball's edges are well defined, we can use a high threshold for "circleness" to eliminate parts of the image that we do not consider circles.
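One way to picture a "circleness" threshold is sketched below. This does not reproduce the Circles module's internals. It is a brute-force search, assumed here for illustration, that scores each candidate circle by the fraction of evenly spaced perimeter points that land on an edge pixel and keeps candidates whose score exceeds `min_score`. The functions `circleness` and `find_circles`, the 36-point sampling and the `(x, y, radius, score)` tuple layout are all hypothetical.

```python
import math


def circleness(edges, cx, cy, r, samples=36):
    """Fraction of evenly spaced points on circle (cx, cy, r) that hit an edge pixel."""
    h, w = len(edges), len(edges[0])
    hits = 0
    for i in range(samples):
        a = 2 * math.pi * i / samples
        x = round(cx + r * math.cos(a))
        y = round(cy + r * math.sin(a))
        if 0 <= x < w and 0 <= y < h and edges[y][x]:
            hits += 1
    return hits / samples


def find_circles(edges, radii, min_score=0.8):
    """Try every center and radius; return (x, y, radius, score) above min_score.

    A high min_score rejects shapes that are only partly round. Neighboring
    centers can all pass, so real code would also merge nearby duplicates.
    """
    h, w = len(edges), len(edges[0])
    found = []
    for r in radii:
        for cy in range(h):
            for cx in range(w):
                score = circleness(edges, cx, cy, r)
                if score >= min_score:
                    found.append((cx, cy, r, score))
    return found
```

Raising `min_score` toward 1.0 mirrors the "high threshold" idea above: only outlines that are almost completely round survive.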

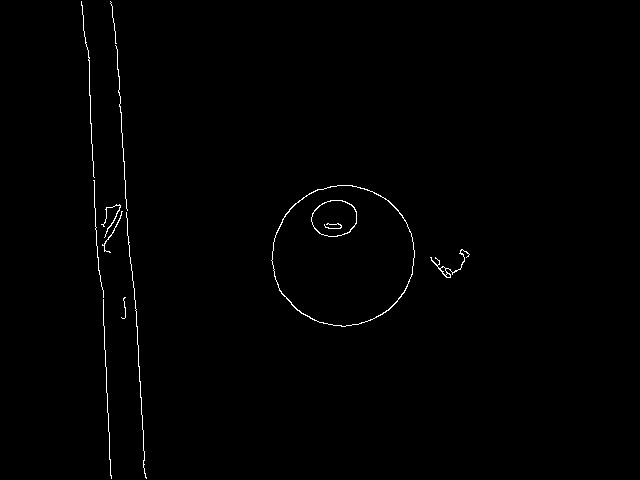

The final criterion we use in the Circles module is the allowed circle size. Since the camera points towards the floor, the apparent size of the ball changes only slightly depending on whether it is close to or far from the robot. Because this holds for every ball the camera sees, we can limit circle detection to a tight size range, which further eliminates round, reddish shapes that are the wrong size.

Finally, we add a filter that eliminates all but the largest circle, on the assumption that we only want to move towards and capture the nearest ball. Since all balls are the same size, the nearest ball appears largest, so this simple filter ensures that the robot goes after the easiest target.
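The size check and the "largest circle only" filter together amount to a few lines. The sketch below assumes each detected circle is a `(x, y, radius, score)` tuple; that layout and the name `pick_target` are hypothetical, not the Circles module's output format.

```python
def pick_target(circles, min_radius, max_radius):
    """Drop circles outside the expected size band, then return the largest.

    circles: list of (x, y, radius, score) tuples (hypothetical layout).
    Returns the largest in-range circle (the presumed nearest ball), or None.
    """
    sized = [c for c in circles if min_radius <= c[2] <= max_radius]
    if not sized:
        return None
    return max(sized, key=lambda c: c[2])
```

For example, with a radius band of 8 to 25 pixels, a 30-pixel circle (perhaps a round bumper feature) and a 4-pixel circle (noise) are both rejected before the largest remaining circle is chosen.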

To better illustrate the ball identification process, and to try it on more images, we take the end result, use it as a mask on the original image, recolor the masked ball green, and combine that back into the source image. As a result, detected balls appear in green and irrelevant parts of the image appear darker.
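The mask-and-recolor display step can be sketched as follows. It assumes the detected ball is a `(x, y, radius, ...)` tuple and the image is rows of `(r, g, b)` tuples. Painting the ball with green equal to its brightest channel and halving everything else are illustrative choices, not RoboRealm's exact blending. The function name `highlight_ball` is hypothetical.

```python
def highlight_ball(image, circle, dim=0.5):
    """Paint pixels inside the detected circle green and darken everything else.

    image: rows of (r, g, b) tuples; circle: (x, y, radius, ...) (hypothetical layout).
    Green intensity follows the pixel's brightest channel so some shading survives.
    """
    cx, cy, radius = circle[0], circle[1], circle[2]
    out = []
    for y, row in enumerate(image):
        new_row = []
        for x, (r, g, b) in enumerate(row):
            if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                new_row.append((0, max(r, g, b), 0))  # inside the mask: recolor green
            else:
                new_row.append((int(r * dim), int(g * dim), int(b * dim)))  # dim the rest
        out.append(new_row)
    return out
```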

The recoloring works so well that it may appear we have somehow "cheated". To prove otherwise, we took more images using the Kinect's RGB camera, which show the ball recolored even with bumpers in the way. Note that the circle sizes we used for the images above were larger than the ones we used for the Kinect images. Both the ball and the bumper pass the color filter, but the bumper is eliminated afterwards by the circle detection.

Now that we have a single ball detected, we need to know how to move the robot relative to the ball's location. If you were lucky enough to place the camera in the middle of the ball pickup mechanism, you can steer the robot towards the ball simply by rotating until the ball is horizontally centered in the image. Once it is centered, you can drive the robot forwards until the ball disappears from view. The Circles module has already calculated the ball's location and stored it in the CIRCLES array (the first two numbers). We can visualize this by drawing a line from the ball to the center of the screen. The robot can then be steered incrementally, similar to what was described in the Tracking tutorial.
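The rotate-then-drive logic can be sketched as below. It assumes only what the text states about CIRCLES: its first two numbers are the ball's position. The deadband, gain, turn limit, forward speed and the "positive turn means rotate right" convention are hypothetical values, and the clamped proportional turn is one simple way to steer incrementally, not necessarily the Tracking tutorial's method. The name `steer_to_ball` is also hypothetical.

```python
def steer_to_ball(circles, image_width, deadband=10, gain=0.005, max_turn=0.5):
    """Return (turn, forward) drive commands from a CIRCLES-style list.

    circles: flat list whose first two values are the ball's x, y position;
    an empty list means no ball is visible. Only x is needed for rotation.
    turn > 0 means rotate right (hypothetical sign convention).
    """
    if len(circles) < 2:
        return 0.0, 0.0  # no ball in view: stop (it may already be in the pickup)
    error = circles[0] - image_width / 2  # horizontal offset from screen center
    if abs(error) <= deadband:
        return 0.0, 0.5  # centered: drive straight forward
    # Off center: rotate in place, turning harder the farther off the ball is.
    turn = max(-max_turn, min(max_turn, gain * error))
    return turn, 0.0
```

With a 320-pixel-wide image, a ball at x = 165 is within the deadband, so the robot drives forward. A ball at x = 300 produces a right turn clamped to 0.5. The deadband keeps the robot from oscillating when the ball is nearly centered.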

To try this out yourself:

- Download RoboRealm

- Install and Run RoboRealm

- Load and Run the ball recognition robofile, which will recolor a detected ball green.

- Change the min and max circle sizes in the Circles module to adapt the system to your camera height.

If you have any problems and can't figure things out, let us know by posting in the forum.